Robots.txt

This section explains how to configure your robots.txt file and customise it to suit your needs.

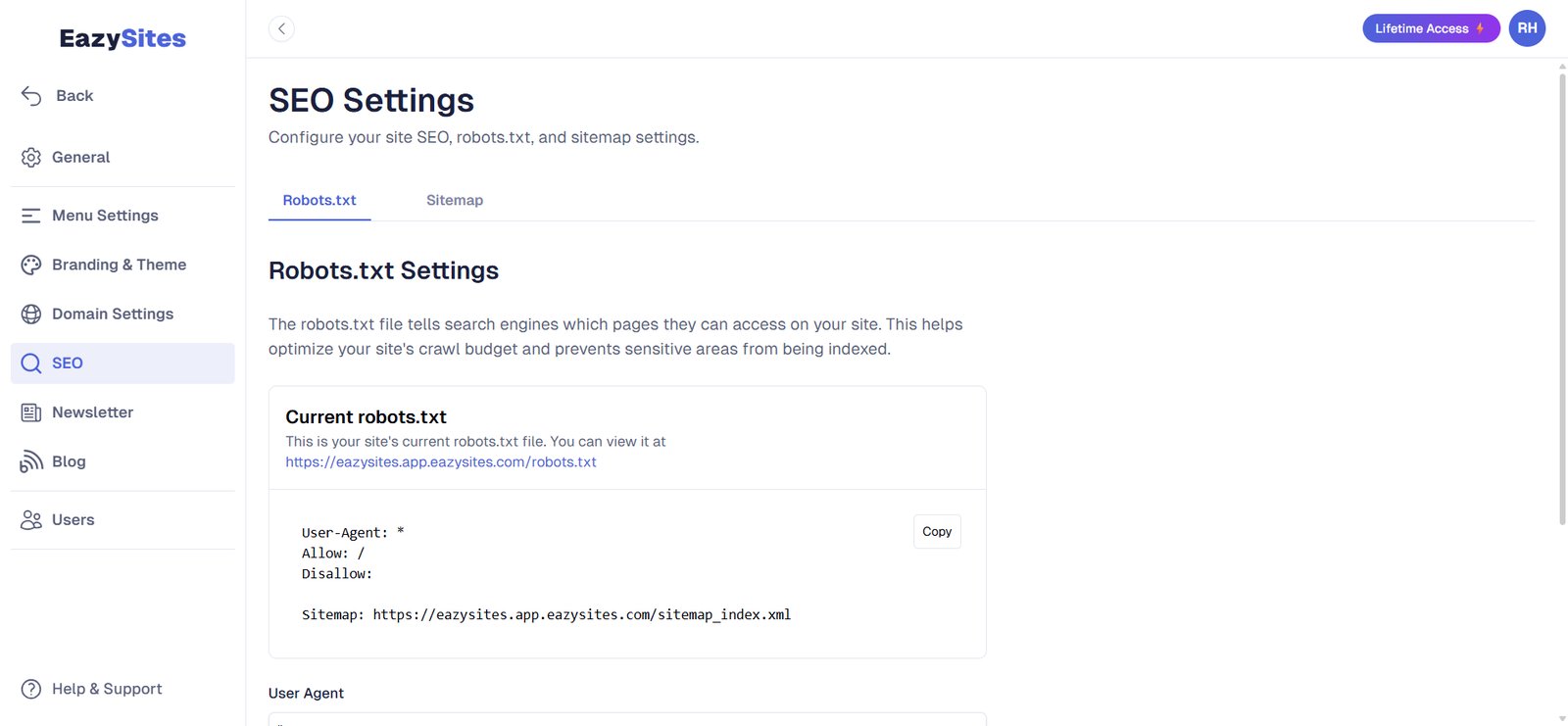

Default Robots.txt Rules

By default, your website is accessible to search engines for crawling and indexing. The current robots.txt file includes the following rules:

User-Agent: *

Allow: /

Disallow:

Sitemap: https://yourwebsite.com/sitemap_index.xml

Explanation of Default Rules

User-Agent: The * in this line indicates that the rules apply to all web crawlers (search engine bots).

Allow: The / directive allows all pages on your website to be crawled by search engines.

Disallow: An empty Disallow directive means there are no restrictions on crawling.

Sitemap: This line specifies the location of your sitemap, allowing search engines to find it easily for better indexing.

This setup lets all search engines crawl your entire website and locate your sitemap, which helps them discover and index your pages.
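To check how a crawler might read the default rules, the following minimal sketch parses them with Python's standard-library `urllib.robotparser`. The page URL and the `Googlebot` user agent are illustrative assumptions, and the rules are written on consecutive lines because this parser treats a blank line as the end of a rule group.

```python
from urllib.robotparser import RobotFileParser

# The default rules from above, written on consecutive lines.
DEFAULT_RULES = """\
User-Agent: *
Allow: /
Disallow:
Sitemap: https://yourwebsite.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(DEFAULT_RULES.splitlines())

# Any crawler may fetch any page under the default rules.
# The page URL below is a made-up example.
print(parser.can_fetch("Googlebot", "https://yourwebsite.com/any/page"))  # True

# The sitemap location is exposed as a list (Python 3.8 or later).
print(parser.site_maps())  # ['https://yourwebsite.com/sitemap_index.xml']
```

This is only a local sanity check; individual search engines may interpret robots.txt slightly differently from Python's parser.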

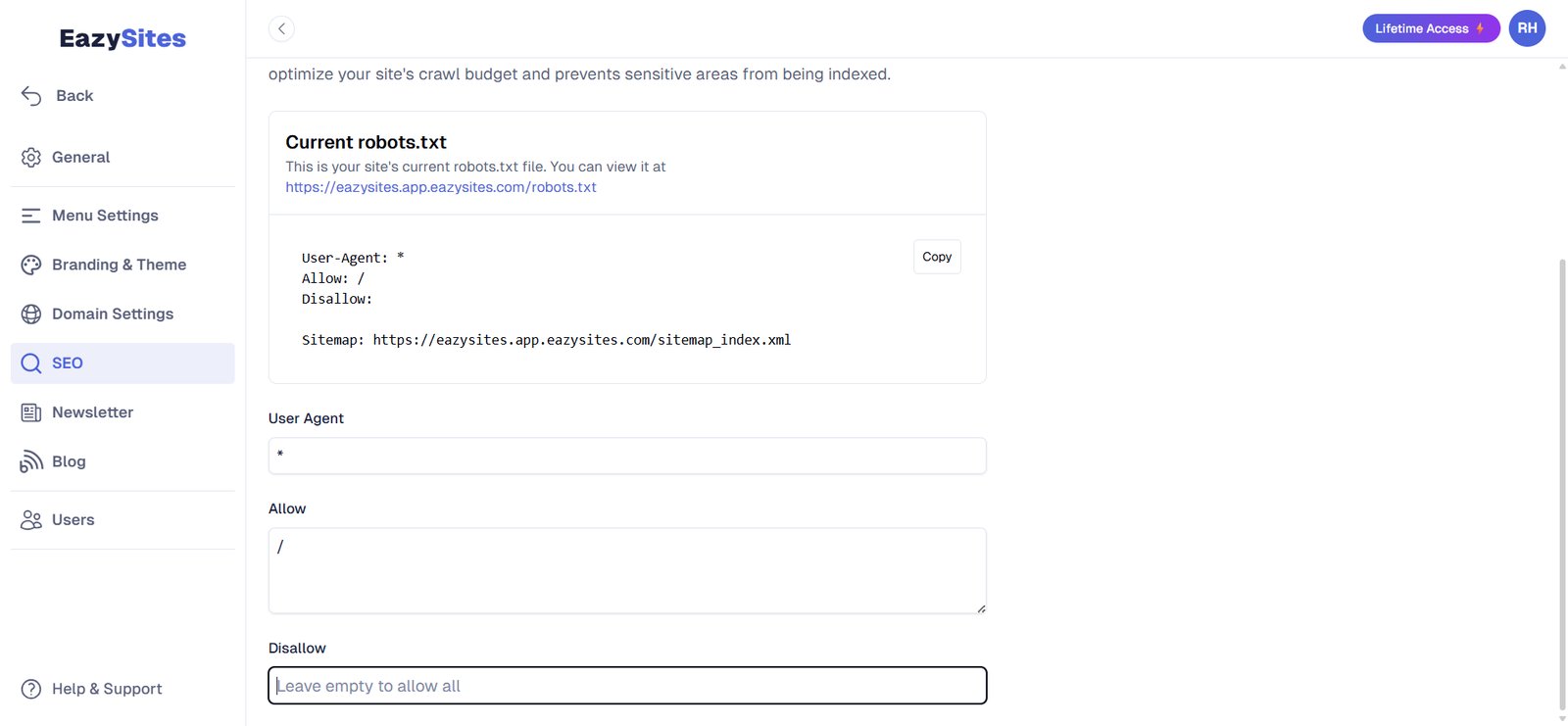

Customising Your Robots.txt File

Eazysites now allows you to customise your robots.txt file. You can modify the rules to block specific pages or bots as needed.

Here's how to customise it:

Navigate to Site settings.

Go to the SEO section.

Edit the Robots.txt fields for User Agent, Allow, and Disallow:

User Agent: Specify the user agent (e.g., * for all bots).

Allow: Specify which pages or directories to allow (e.g., / to allow all).

Disallow: Specify which pages or directories to block (e.g., /private/ to block a specific directory).

Click Save to apply.
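To picture how the three fields map onto the resulting file, here is a rough sketch in Python. The `build_robots_txt` function and its parameters are hypothetical and only illustrate the relationship between fields and output; this is not how Eazysites generates the file internally.

```python
def build_robots_txt(user_agent="*", allow=("/",), disallow=(), sitemap=None):
    """Assemble robots.txt text from field values (hypothetical helper)."""
    lines = [f"User-Agent: {user_agent}"]
    lines += [f"Allow: {path}" for path in allow]
    # With nothing to block, emit an empty Disallow line (no restrictions).
    lines += [f"Disallow: {path}" for path in disallow] or ["Disallow:"]
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines) + "\n"


# Example: block the /private/ directory for all bots.
print(build_robots_txt(
    disallow=["/private/"],
    sitemap="https://yourwebsite.com/sitemap_index.xml",
))
```

Calling the function with no arguments other than the sitemap would reproduce the default rules, including the empty Disallow line.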

Example of Custom Rules

Here’s an example of a customised robots.txt configuration:

User-Agent: *

Allow: /

Disallow: /private/

Sitemap: https://yourwebsite.com/sitemap_index.xml

In this example, all pages are allowed for crawling except for those in the /private/ directory.
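The reason /private/ is blocked even though Allow: / also matches it is that crawlers following RFC 9309 (including Googlebot) apply the most specific, meaning longest, matching rule. The sketch below illustrates that idea with plain prefix matching; `is_allowed` and the sample paths are illustrative assumptions, and wildcard characters such as * and $ inside paths are deliberately not handled.

```python
def is_allowed(path, rules):
    """Return True if `path` may be crawled under the longest-match rule.

    `rules` is a list of (directive, pattern) pairs such as ("allow", "/").
    Simplified sketch: prefix matching only, no * or $ wildcards.
    """
    best_len = -1
    allowed = True  # no matching rule means crawling is allowed
    for directive, pattern in rules:
        if not pattern or not path.startswith(pattern):
            continue  # empty patterns match nothing; skip non-matching rules
        is_allow = directive.lower() == "allow"
        # The longest pattern wins; on a tie, Allow takes precedence.
        if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
            best_len = len(pattern)
            allowed = is_allow
    return allowed


CUSTOM_RULES = [("allow", "/"), ("disallow", "/private/")]

print(is_allowed("/private/report.html", CUSTOM_RULES))  # False
print(is_allowed("/blog/post", CUSTOM_RULES))            # True
```

Note that the path /private (without a trailing slash) is not covered by Disallow: /private/, so it would still be allowed in this sketch.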

With the ability to customise your robots.txt file, you can better control how search engines interact with your website. This feature helps you optimise your site's SEO by managing which pages search engines crawl. If you have any questions or need further assistance, please reach out to our support team. Happy optimising!

Written by Adam • Last updated: 9/15/2025